I became stuck in a sort of technological time warp, as a consequence of which I found myself developing a three-dimensional ultrasonic imaging system in the '70s which, with hindsight, had many of the attributes of what would now be called virtual reality. In the course of this I learned a good deal about the subjective, as well as the technical, aspects of three-dimensional imaging, and developed an absorbing interest in what goes on in the eye-brain-tactile mechanisms of the observer.

3-D scanning finally came about largely as a matter of necessity imposed by patents. I was named as the inventor in the original Kelvin & Hughes patents on 2-D ultrasound contact scanning, which were pretty basic. However there was a long-running battle going on in the US courts between Smiths, and Automation Industries Inc of Denver, over the so-called "Firestone Patents" on industrial ultrasonic testing.

Smiths eventually lost the case in the US Supreme Court in the mid 'sixties, and as part of the settlement agreed to pull out of ultrasonics, and sell the Smiths ultrasonics patent portfolio to Automation. Kelvin & Hughes in Glasgow was shut down in 1967, but because of Ministry of Health pressure, the 2-D medical scanner line was preserved and sold off to Nuclear Enterprises in Edinburgh. They also received a "paid-up licence" under the "Brown Patents" as they became known, so that they could continue to make and sell the machines, but unfortunately nobody else in the UK shared that privilege.

I helped to get the business re-established within Nuclear Enterprises, but then moved on to a research job at Edinburgh University. I then wanted to go back into the ultrasound business, and by this time the new owners of the 2-D scanning patents were going round demanding royalties with menaces from everyone in the business.

I then found myself in the curious position of having to invent my way around my own basic patents. The only way I could see to do it was to invoke 3-D scanning. The words "....adapted to be moved in a plane" were the only weakness I could see in the generality of the original main claim. If I made a machine in which the probe was not constrained to move in a plane, then I was clear.

At the time there was a small company, Sonicaid Ltd, based at Bognor making fetal monitoring equipment, and very keen to get into medical imaging. We reached an agreement, and in late 1973 I took over a small factory unit in Livingston, near Edinburgh, and started building up a small team of people I'd known from the past, to develop a fully-featured 3-D medical scanning system.

"Real-time" scanning arrays were only just appearing, and so we started with a system based on a conventional single-transducer "probe", which could produce a highly-directional focused beam of very short ultrasonic pulses in the sub-millimetre wavelength range. We knew that if we wished to do so later on, we could replace it with a real-time array, provided we made provision for that in the basic design. The fundamental concept was that if we knew where the probe was, and where it was pointing in 3-D space relative to a fixed set of axes, and if we also knew the velocity of sound and how long it took for a pulse to travel to and from a target; then we had the basic data needed to define that target's position in 3-D space. There was no need for two probes, or cross-bearings or anything else, - pulse-echo systems are intrinsically three-dimensional, provided one does not throw the data away ! DEVELOPMENT

An excerpt from an unpublished article on the 3D Multiplanar scanner that Tom Brown invented and marketed in 1975 * :

THE SONICAID VENTURE

Time Warp

Patents

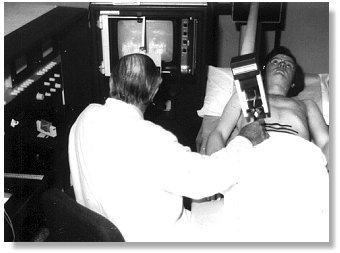

The Multiplanar scanner in use. The operator is viewing the stereoscopic image pair on the screen, via the prism stereoscope mounted in front of it. Note also the View Control Unit on the console desktop to his left.

The 3-D "Multiplanar" scanner development broke down into three main parts, each of which has its counterpart in VR. Firstly we needed a 3-D measuring system to track the probe and its sound beam in 3-D space, in both position and orientation. Secondly we had to provide a display system which would present the 3-D data in some intelligible form. Thirdly we required a mechanism which interacted with the user so that he or she could modify the view they had of the synthetic 3-D environment we created.

There have been several attempts over the years to develop ultrasonic stereoscopic scanners, and we were certainly not the first. However the others didn't seem to get much further than one-off research projects, whereas we were, I think, the first to try to drive it all the way through to a marketable product.

MEASURING SYSTEM

We achieved the necessary freedom of probe movement by mounting the probe on gimbals on the end of a 4-foot "dipping-duck" counterbalanced boom. This was itself mounted on gimbals in the front of a measuring tank. A fine linkage mechanism transferred a mirror-image of all movements of the probe relative to the boom assembly, to a "wand", about the size of a match stick, inside the tank. In this way the probe could be moved around anywhere on the patient's skin, and pointed in any direction into the body, and the wand would reproduce these movements, in reverse, inside the tank.

The tank was an oil-filled anechoic chamber, on three sides of which were fixed arrays of receiving transducer tiles. The wand supported two small acoustic emitters, ("beads") which were effectively omni-directional point sources of acoustic pulses. The inner bead corresponded, in scale, to the position of the probe face. The outer bead corresponded to a point on the probe axis about 15cm away from the face.

When the inner bead was pulsed the times of flight of the spherical wavefront to the three orthogonal plate receivers were converted into voltages representing the X, Y & Z positions of the probe face. In a similar way pulsing the outer bead produced a further three voltages. The differences between the first and second sets of voltages corresponded to the "directional cosines", - proportional to the components of the sound pulse velocity along the three axes.

This relatively simple system thus gave us 6 signals, - 3 positional and 3 directional, - which when combined with time-of-flight and echo amplitude data, provided us with all we needed to drive the 3-D imaging system. I am sure we were by no means the only group to use a similar type of location system, - I know for example that Ray Brinker in St Louis used a similar system, but through air, to locate a hand-held transducer array on a patient, and there will doubtless have been others.
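In modern terms, the chain from bead timings to the position of an echo can be sketched in a few lines. What follows is a minimal Python sketch and not the analogue circuitry actually used: the function names, the speed of sound assumed for the tank oil (`C_OIL`) and the default tissue velocity are illustrative assumptions, and the scaling and mirror-imaging introduced by the wand linkage are ignored.

```python
import math

C_OIL = 1400.0     # m/s, assumed speed of sound in the tank oil (illustrative only)
C_TISSUE = 1540.0  # m/s, conventional average figure for soft tissue


def bead_position(t_x, t_y, t_z, c=C_OIL):
    """Distance of a bead from each of the three orthogonal receiver plates,
    taken from the one-way times of flight (seconds) of its pulse."""
    return (c * t_x, c * t_y, c * t_z)


def direction_cosines(inner, outer):
    """Unit vector along the probe axis, from the inner bead to the outer bead."""
    diff = [o - i for i, o in zip(inner, outer)]
    length = math.sqrt(sum(d * d for d in diff))
    if length == 0.0:
        raise ValueError("inner and outer bead positions coincide")
    return tuple(d / length for d in diff)


def echo_position(probe_face, direction, round_trip_time, c=C_TISSUE):
    """Locate an echo: start at the probe face and travel along the beam
    direction for half the round-trip distance."""
    echo_range = c * round_trip_time / 2.0
    return tuple(p + echo_range * d for p, d in zip(probe_face, direction))
```

The three positional values come from `bead_position` for the inner bead, the three directional values from `direction_cosines`, and each received echo is then placed in space by `echo_position`.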

DISPLAY SYSTEM AND INTERACTIVE CONTROLS

The following describes the display arrangements as a series of "layers" of sophistication, as though they had been applied one after another. Of course it was not like that, but there was a kind of break point at one stage, when, after some water tank experiments, I came to the conclusion, - somewhat reluctantly, - that we would have to go all the way into stereoscopy.

Where I refer to the "display screen", we routinely used conventional electrostatic medium-persistence crt displays, variable-persistence storage displays, and scan-converters. Initially the scan converters were the Princeton analogue types, but later digital scan converters became available. Scan conversion had several advantages. The most obvious was that once the image was converted to raster-scan TV format, we could use all the available video display and recording technology. Until the advent of scan converters, the best grey-scale images were obtained by photographic integration from a conventional crt, - storage displays were poor by comparison. However the early analogue scan converters gave us quite pleasing grey-scale images directly, though I felt this was as much to do with image "softening" due to charge spreading in the storage matrix, as with genuine grey-scale. Purpose-built 3-D displays like the Tektronix one were not yet available.

The first part of the display electronics generated three vectors representing the starting values, and components of the sound velocity along the primary co-ordinate axes of the system. Echo information was processed to compress the enormous dynamic range of received echoes into the limited grey scale available, while still preserving the definitive leading edges of pulses so far as humanly possible. It was then presented as brightness modulation of whatever display device was used.

If for example a simple crt display was used, then utilising the X and Y vectors as x and y timebases, and modulating the gun by echo information, would provide a "picture" of the patient's tissues as seen from a viewpoint along the remaining (Z) axis. In the same way any other pair of vectors could be used to present a picture from along the remaining axis.

Variable viewpoint projection

In practice, instead of putting the three vectors directly into a display device, we first put them through a two-stage co-ordinate transformation network, with which we could rotate the viewpoint in azimuth and in elevation. This now very familiar computer graphics trick was then a bit unusual though by no means unknown. Nowadays it would be done digitally, but in the 'seventies we used a pair of double-gang analogue sine/cosine potentiometers and some operational amplifiers to do the necessary computation.

This enabled us to choose any viewpoint on the surface of an imaginary sphere surrounding the patient, from which to build up a three-dimensional "view" of the tissues.
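Done digitally, the two-stage transformation amounts to two successive plane rotations. The following Python sketch is only an illustration of that idea: the choice of axes (y vertical, z as depth), the order of the stages and the function name `rotate_view` are assumptions, not a record of how the potentiometer network was wired.

```python
import math


def rotate_view(point, azimuth_deg, elevation_deg):
    """Rotate a point (x, y, z) into the viewer's frame in two stages.

    Returns (screen_x, screen_y, depth). Dropping the depth term gives a
    parallel projection as seen from the chosen viewpoint.
    """
    x, y, z = point
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)

    # Stage 1: azimuth, a rotation about the vertical (y) axis.
    x1 = x * math.cos(az) - z * math.sin(az)
    z1 = x * math.sin(az) + z * math.cos(az)

    # Stage 2: elevation, a rotation about the new horizontal (x) axis.
    y2 = y * math.cos(el) - z1 * math.sin(el)
    z2 = y * math.sin(el) + z1 * math.cos(el)

    return (x1, y2, z2)
```

Each stage needs only one sine and one cosine of a single angle, which is why a pair of ganged sine/cosine potentiometers was enough to do the job in analogue form.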

View Control Unit

The two sin/cos pots were mounted in a little desk-top gizmo which we called the "view control unit" (VCU). It is easy to demonstrate but hard to describe: it provided the user with a moveable "window" with an arrow through it, which could be panned and tilted to enable him to choose the "line of sight" he wanted.

The VCU could also be translated along three axes, driving linear encoders as it went, so defining the viewpoint position, in addition to the direction of view.

In this way the VCU performed part of the functions of a VR helmet, except that while the user could choose his viewpoint fairly intuitively, with some tactile feedback, it was by hand rather than by moving his head. Although helmet-mounted displays were already available for military purposes, they were out of our reach, and we had to make do with console-mounted screens.

Viewing Field Truncation

Apart from controlling the viewpoint, the VCU had further controls within it to "gate" the echo signals, so that only those signals arising from a selected volume of space would be displayed. The viewable volume was defined as the space within six planes, two for each axis, equivalent to the walls of a box. In this way one could present all echoes from within a rectangular volume of tissue, or close it down to a thin layer to simulate a 2-dimensional scan.

"Range-gating" was also provided. This could be used, for example, to "peel off" the superficial layers of tissue to a selected depth below the skin surface.

Stereoscopy

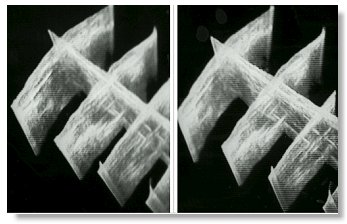

Demonstration composite image pair of transverse sections, intersected by a longitudinal scan. Stereoscopy is reversed to allow the images to be fused without having to use a stereoscope by the "cross-eyed" method, but only by those with that curious gift.

So far, the above describes a variable-projection system, but one which only projects a particular monocular projection of the data onto a flat screen. This was as far as I had originally intended to go, because even then I was wary of user reaction to stereoscopy.

The original design objective was not to make "solid" pictures of the tissues. There are just so many echo signals returned from the tissues that one would run into a "white-out" very quickly. What we aimed to do was to be able to superimpose multiple conventional 2-D "cuts" on the same screen, to form a composite image in which we could see the relationships between the (sampled) tissue structures. We found we could do this quite effectively, so long as we chose an oblique viewpoint from which the individual scans did not overlap. If scans did overlap on screen, then there was no way of distinguishing foreground from background echo signals.

I ran some experiments with a prototype system, with a little plastic doll stuck in front of a bit of carpet tile in a bucket of water. This was an extreme example of the "overlapping" problem. I was so impressed with the way the image of the doll "jumped out" of the mass of echoes from the tile surface when viewed stereoscopically, and then disappeared completely when I shut one eye, that I realised that we just had to grasp the nettle of synthesised stereoscopic imaging.

Stereoscopy was actually implemented very simply. We provided two views of the same data from viewpoints separated horizontally by something close to the inter-ocular distance. In this case the two images were displayed by means of alternate frame switching on the two halves of a split screen.

An optical wedge stereoscope with a central shield plate was built onto the various display screens, so that when the operator looked into the space "behind" the screen, he saw a three-dimensional spatial image.

We found that these stereoscopes were most acceptable if they were "skeletal". There was a strong negative reaction to any kind of "hood" which prevented the user from also keeping an eye on what was going on round about him. Never put your customer's head in a box !

Perspective

The final level of processing simulated the effects of linear perspective, and removed the jarring artificiality which would have arisen if it were not provided. Analogue multiplier elements were employed to modulate the amplitude of displacements perpendicular to the mean viewing direction, in inverse proportion to the distance of the echoes away from the observer.

In this way structures close to the observer would subtend larger angles than ones further away, but because of the size-constancy mechanisms of the brain, would appear to be the correct relative sizes.
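The perspective step, and the pair of horizontally separated viewpoints used for stereoscopy, can both be written as one projection routine. This is a hedged Python sketch rather than a description of the analogue multiplier circuit: the coordinate convention (z measured away from the observer, starting at the screen plane), the 0.5 m viewing distance and the 65 mm eye separation are all assumptions chosen for illustration.

```python
def project_point(point, eye_x=0.0, viewing_distance=0.5):
    """Project a point in the viewer's frame onto the screen plane (z = 0).

    Displacements perpendicular to the viewing direction are scaled in
    inverse proportion to the point's distance from the observer.
    """
    x, y, z = point
    distance = viewing_distance + z
    if distance <= 0.0:
        raise ValueError("point lies at or behind the observer")
    scale = viewing_distance / distance
    return (eye_x + (x - eye_x) * scale, y * scale)


def stereo_pair(point, eye_separation=0.065, viewing_distance=0.5):
    """Left-eye and right-eye screen positions of the same point."""
    half = eye_separation / 2.0
    left = project_point(point, -half, viewing_distance)
    right = project_point(point, +half, viewing_distance)
    return left, right
```

In this arrangement a point lying in the screen plane lands at the same place in both images, and the horizontal offset between the two images grows as the point recedes, which is the cue the stereoscope hands to the observer.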

IMPLEMENTATION

Detailed design began in early 1974, on what I regarded as a fairly stout shoe-string. I do not mean to be disrespectful in any way, but we were a small company, and it was clear to me that we were unlikely to get "a second bite at the cherry". It had, so far as humanly possible, to be right first time, and anything we were likely to need in the future had to have provision for it built in at the outset. It did not matter if things had to be added later, but we could not expect the luxury of being able to do a basic re-design in the immediately-foreseeable future to accommodate things which we had failed to make provision for.

I was convinced that sooner or later we would want to use coherent processing of some sort or other, - for example to extract Doppler from echo returns, or to use coherent demodulation for some of the semi-holographic imaging techniques then being exploited for synthetic aperture systems such as sideways-looking radar.

Consequently the whole system was run coherently from a crystal clock, which synchronised all pulse-generation and display functions. However much of the display system used analogue elements such as operational amplifiers and multipliers, and of course the signal-frequency system had to be largely analogue in nature, though coherent detection was available if we wished to use it.

RESULTS AND MARKET REACTION

The system worked quite remarkably well. As the operator scanned the transducer around, he or she could "see" the sound beam waving around in three-dimensional space, "lighting up" reflecting structures inside the body, rather like a wartime searchlight illuminating enemy aircraft.

There was a satisfying "interactivity" between the movements imposed on the probe, and what was observed in the display. The user could "explore" the structures he was interested in, in a very real and satisfying way.

We could meet our original objective of building up composite scans involving many superimposed "slices" of tissue, and our machine was unique in being able to do it.

However the real pay-off came from a quite unexpected direction, and was to do with the constraints on resolution imposed by the physics involved.

By and large, the higher the frequency of the ultrasonic energy, - and so the shorter the wavelength, - the better the available resolution. However ultrasound is rapidly absorbed as it passes through tissue, and the shorter the wavelength the more rapidly this happens. There is a necessary trade-off between frequency (and hence resolution) and the range from which echo information can be obtained.

(WHY 3-D HELPS)

Unfortunately it is by no means as simple as that. The directionality of any radiating source is a function of the aperture/wavelength ratio. However out to a distance equal to the square of the aperture radius, divided by the wavelength, one is in the "Fresnel Zone", in which the distribution of sound energy is exceedingly complex, and the effective "beamwidth" approximates to the diameter of the source. Finally, the short pulses necessary for range resolution imply an extremely wide frequency spectrum, each component of which has its own directionality and Fresnel Zone. This has the effect of "smearing" all these otherwise calculable considerations over a wide range of wavelengths. One can also do a certain limited amount of beam shaping with acoustic lenses cemented to the transducer face.
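The extent of the Fresnel Zone follows directly from the rule just quoted: the square of the aperture radius divided by the wavelength. A minimal Python sketch is given below; the 1540 m/s sound velocity is the conventional soft-tissue average, and the transducer size and frequency in the usage note are illustrative assumptions, not figures from the Multiplanar design.

```python
def wavelength(frequency_hz, sound_velocity=1540.0):
    """Wavelength in metres for a given frequency and velocity of sound."""
    return sound_velocity / frequency_hz


def fresnel_zone_length(aperture_radius_m, frequency_hz, sound_velocity=1540.0):
    """Distance out to which the Fresnel Zone extends: radius squared
    divided by wavelength."""
    return aperture_radius_m ** 2 / wavelength(frequency_hz, sound_velocity)
```

For an assumed 10 mm radius transducer at 2.5 MHz the wavelength is about 0.6 mm and `fresnel_zone_length(0.010, 2.5e6)` comes out at roughly 0.16 m, so much of the useful depth of an abdominal scan would lie inside the Fresnel Zone. Because a short pulse contains a wide band of frequencies, the calculation gives a different answer for each component, which is the "smearing" referred to above.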

What this all means is that obtaining the optimum resolution performance in an ultrasonic imaging system involves uneasy compromises between all sorts of conflicting factors, and comes close to being an art, rather than a science. Add to that the problems of compressing a dynamic range of echo responses in the order of at least 60 dB (probably nearer 100 dB) into the seven or so available grey levels in display devices while preserving as much of the pulse envelope data as possible, and one begins to realise that the designers "were living in interesting times".
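The scale of the compression problem is easier to appreciate with numbers. The sketch below uses plain logarithmic compression, which is a common textbook approach and is assumed here for illustration only; the text does not say what compression law the Multiplanar's circuits followed, and the function name and defaults are hypothetical.

```python
import math


def compress_to_grey(amplitude, full_scale=1.0, dynamic_range_db=60.0, levels=7):
    """Map an echo amplitude onto one of a few grey levels (0 = darkest).

    Amplitudes are expressed in decibels relative to full scale, and the
    chosen dynamic range is divided evenly among the available levels.
    """
    if amplitude <= 0.0:
        return 0
    level_db = 20.0 * math.log10(amplitude / full_scale)  # 0 dB at full scale
    fraction = (level_db + dynamic_range_db) / dynamic_range_db
    fraction = min(max(fraction, 0.0), 1.0)
    return min(int(fraction * levels), levels - 1)
```

With 60 dB spread over seven levels, each grey step has to stand for a change of between eight and nine decibels in echo amplitude, and at 100 dB the steps become coarser still.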

From the user's point of view, - who is always looking to discern more and more detail, - it all means that he or she is usually working close to the achievable resolution of the system.

This is where the advantages of a genuinely three-dimensional system began to emerge. It appeared to have its greatest value in "making sense" of small structures which lay close to or below the otherwise achievable resolving power of the ultrasonic system.

This is a difficult proposition to prove. Of course it did not actually enhance the "objective" resolution, which was determined by the usual factors such as bandwidth, wavelength, aperture etc. What it did seem to do was to enhance the operator's "understanding" of what he or she was looking at, and my best estimate was that it more-or-less doubled the "subjective" accessible resolution which the operator could use.

This has made me re-think how, and for what purpose, stereoscopy evolved in Nature. It is very much a "hard-wired" system, with split "wiring" to the retina, and all sorts of other major hardware components. Though different species vary in the extent of the stereoscopic field which they possess, stereoscopic vision is present in many creatures which have never really had much call for living in trees, or playing ball games. Though stereo vision does provide range-finding capability, I wonder if that may be a secondary, incidental benefit, rather than its prime purpose.

Perhaps stereoscopy, and all the richness of cross-correlation which goes on between our duplicated visual systems, is much less to do with swinging between the branches of trees, but much more to do with surviving in the half light when that indistinct smudge, right out on the limits of vision, might be prey, - but might also be a predator.

REAL-TIME SCANNING

The emergence of real-time scanners in the mid '70s marked a major sea change in the medical ultrasonics business. Except for a few specialist areas it effectively killed off the previous generation of "Static B-Scanners" of which the Multiplanar was a late example. These older static machines were characterised by having a single "searchlight" probe with a relatively low pulse repetition rate, which was moved over the skin building up an image as it went on some form of storage device. Such machines were capable of great registration accuracy, and the acoustic system could be honed to give the best possible resolution. They were also capable of "compounding", by which is meant the process of examining the same structure from multiple directions, and integrating the data so obtained. Since there can be quite a lot of spatial "noise" in the images, this provided the opportunity for some degree of integrating the genuine signals "up", and integrating noise "out".
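The benefit of compounding can be illustrated with a toy simulation. The sketch below assumes perfectly registered looks, simple averaging and independent Gaussian noise; those are simplifying assumptions made for illustration, since the static scanners integrated on a storage device rather than by arithmetic averaging, and real spatial noise is not Gaussian. The function names are hypothetical.

```python
import random
import statistics


def simulate_looks(true_signal, n_looks, noise_sd, seed=0):
    """Generate several noisy views of the same line of echo amplitudes."""
    rng = random.Random(seed)
    return [
        [sample + rng.gauss(0.0, noise_sd) for sample in true_signal]
        for _ in range(n_looks)
    ]


def compound(looks):
    """Average co-registered looks sample by sample."""
    n = len(looks)
    return [sum(samples) / n for samples in zip(*looks)]


def residual_noise(estimate, true_signal):
    """Standard deviation of the error left in an estimate."""
    return statistics.pstdev(e - t for e, t in zip(estimate, true_signal))
```

Under these assumptions the genuine signal is the same in every look and survives the averaging, while the noise differs from look to look and falls roughly as the square root of the number of looks.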

Real-time scanners had an extended array, or oscillating, transducer and a high pulse-repetition rate, enabling the region below it to be imaged in real time. To begin with at least the real-time images were of relatively poor quality, and this was obvious as soon as a frame was "frozen". However the fact of the images being dynamic both in response to movements within the tissues, and in response to the transducer itself being moved around, led to an appearance of great detail.

It would be wrong to say that this appearance of detail in a moving image is wholly an illusion. Of course the brain cannot invent detail which is not there. However if we want to interrogate a scene with our eyes, we do not stay still. We move our heads or even our bodies in order to "see" it better. Of course in doing so we get some additional parallax information, but I don't for a moment believe that is the whole of the story.

When the neuroanatomy is explored it is found that the detection of movement appears to be a central part of the functionality, and is distributed throughout the various parts of the visual cortex. It is possible that in a real sense the brain somehow "infers" detail, - and genuine detail at that, - from images which otherwise appear to be of poor quality, but which move.

If one is sceptical, one has only to look at the way the brain infers colours. We do not see colours directly. We see a calculated colour, which the brain derives not only from the wavelength mix of the direct light reaching the eye, but also by means of some neurological "multiple simultaneous equation" basis, from the "colour" of surrounding structures.

Whatever the reality, I have little doubt that a fixed image will always look worse than a moving one, irrespective of whether it is monocular or binocular, and irrespective of whether the motion comes from the observer or the thing observed.

We did anticipate real-time in the basic design of the Multiplanar scanner, and would have been able to put a real-time head on the end of the boom. We would have needed an extra bead in the acoustic encoder tank to define its axis, but had provided for that. The display system would have needed modifications, but not radical ones.

Had we been able to do all this, we would have had a real-time viewing system in which the user would have been able not only to see a moving image of the structures below the probe assembly, but to see where these were in space, and to sweep out volume images. We had even got to the stage of planning to pick up the patient's ECG, so that we could strobe the system with the patient's heartbeat to eliminate image blurring.

However none of that was to be. Somehow we had first to get a single-transducer version of the system onto the market, get it accepted medically, and turn the cash flow positive, - and we failed.

CONCLUSION

That is the story of the Multiplanar Scanner. With hindsight, I think it was a project undertaken before its time, - but then one never knows that at the outset. It was a project with considerable depth, in terms of the things we planned to do later, but of course much of that had to be concealed from current users. We were up-staged part-way through by the emergence and commercial success of real-time scanning, and we failed to change tack to exploit that market - as we could have done, - on our way.

The reaction of the users to 3-D imaging, - particularly the "opinion leaders" - was disappointing, but then we were seeking to make obsolete some of the very skills upon which they had built their reputations. Perhaps the most besetting weakness of the Multiplanar Scanner, from that point of view, was that it was essentially an "R-Theta" device, and it could never successfully pretend it was a Cartesian co-ordinate one. The ultrasound world of the day belonged to "Flat-Planers", and they prevailed.

I think we were also asking non-intuitive, deductive-reasoning, right-handed, left-brained successful people to fire up their right brains, and do things they had not done since childhood. Some would do it for a bit and become quite enthusiastic, but then after a day or two Left Brain would re-assert its authority, and its owner couldn't really explain to me why he had lost interest.

The man who spotted and exploited the "resolution enhancement" aspect was a wise old radiologist in Edinburgh, Bruce Young, with an honourable record of achievement in other areas of diagnostic medicine, and who used it amongst other things to follow the ripening of follicles in the ovaries of infertile women. He is living quietly in retirement now, still convinced that "3-D" was a good thing.

After the closure there was something like £250,000 of stock and work in progress left in the factory. Overtures were made to take it over on some sensible basis and try to re-finance the business, but these were firmly rejected.

At least one specimen machine survives. It forms part of a collection in the Hunterian Museum in Glasgow University, along with the original prototype 2-D scanner, the one and only fully-automatic scanner, and specimens of subsequent production 2-D machines. Apart from that, the whole project has vanished virtually without trace.

* From Mr. Tom Brown, personal communications.